How AI Large Language Models Work. From Zero To ChatGPT.

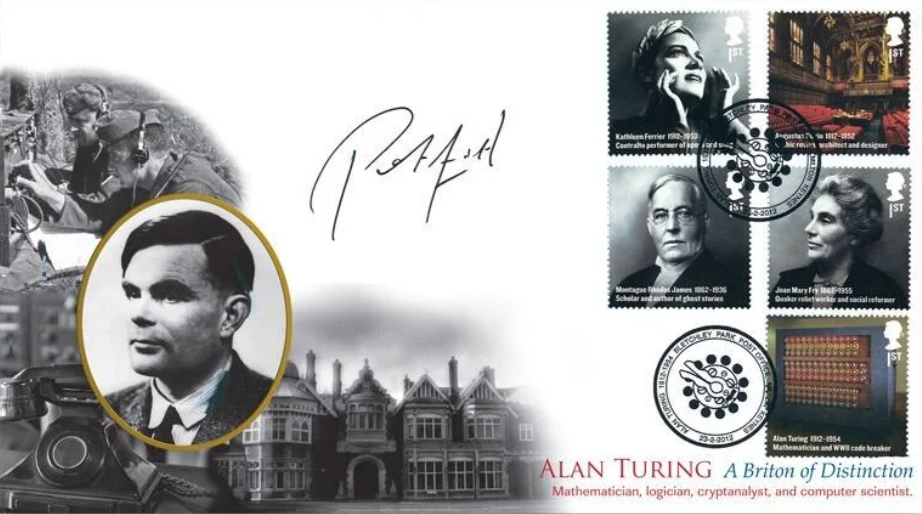

Thanks to Large Language Models (LLMs for short), Artificial Intelligence has now caught the attention of pretty much everyone. ChatGPT, possibly the most famous LLM, skyrocketed in popularity because natural language is such a, well, natural interface, one that has made the recent breakthroughs in Artificial Intelligence accessible to everyone. Nevertheless, how LLMs work is still not widely understood unless you are a Data Scientist or in another AI-related role. In this article, I will try to change that.

Admittedly, that’s an ambitious goal. After all, the powerful LLMs we have today are the culmination of decades of research in AI. Unfortunately, most articles covering them are one of two kinds: they are either very technical and assume a lot of prior knowledge, or they are so trivial that you don’t end up knowing more than before.

This article is meant to strike a balance between these two approaches. Or actually, let me rephrase that: it’s meant to take you from zero all the way through to how LLMs are trained and why they work so impressively well. We’ll do this by picking up just the relevant pieces along the way.

This is not going to be a deep dive into all the nitty-gritty details, so we’ll rely on intuition rather than math, and on visuals as much as possible. But as you’ll see, although the topic is certainly very complex in its details, the main mechanisms underlying LLMs are very intuitive, and that alone will get us very far here.

This article should also help you get more out of using LLMs like ChatGPT. In fact, we will learn some of the neat tricks that you can apply to increase the chances of a useful response. Or as Andrej Karpathy, a well-known AI researcher and engineer, recently and pointedly said: “English is the hottest new programming language.”

But first, let’s try to understand where LLMs fit in the world of Artificial Intelligence.
The field of AI is often visualized in layers:

Artificial Intelligence (AI) is a very broad term, but generally it deals with intelligent machines.

Machine Learning (ML) is a subfield of AI that specifically focuses on pattern recognition in data. As you can imagine, once you recognize a pattern, you can apply that pattern to new observations. That’s the essence of the idea, but we will get to that in just a bit.

Deep Learning is the field within ML that is focused on unstructured data, which includes text and images. It relies on artificial neural networks, a method that is (loosely) inspired by the human brain.

Large Language Models (LLMs) deal with text specifically, and that will be the focus of this article.

As we go, we’ll pick up the relevant pieces from each of those layers. We’ll skip only the outermost one, Artificial Intelligence (as it is too general anyway), and head straight into what Machine Learning is.

To continue reading this article by Andreas Stöffelbauer, a Data Scientist at Microsoft, follow the link below: How Large Language Models work. From zero to ChatGPT.
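
As a rough illustration of the Machine Learning idea described above (recognize a pattern in data, then apply it to new observations), here is a minimal sketch of a nearest-neighbor classifier. It is not taken from the article: the function name, the data points, and the labels "small" and "large" are all hypothetical and chosen only for illustration.

```python
from math import dist  # Euclidean distance between two points (Python 3.8+)


def nearest_neighbor_predict(examples, new_point):
    """Return the label of the known example closest to new_point."""
    closest_point, closest_label = min(
        examples, key=lambda example: dist(example[0], new_point)
    )
    return closest_label


# Hypothetical observations: (features, label) pairs invented for this sketch.
training_examples = [
    ((1.0, 1.0), "small"),
    ((1.5, 2.0), "small"),
    ((8.0, 9.0), "large"),
    ((9.0, 8.5), "large"),
]

# Apply the "pattern" (similar points share a label) to new observations.
prediction_near_small = nearest_neighbor_predict(training_examples, (2.0, 1.5))
prediction_near_large = nearest_neighbor_predict(training_examples, (8.5, 9.5))
print(prediction_near_small, prediction_near_large)
```

The "pattern" here is simply that similar observations tend to share a label. Real ML models, including the neural networks behind LLMs, learn far richer patterns, but the recognize-then-apply loop has the same shape.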

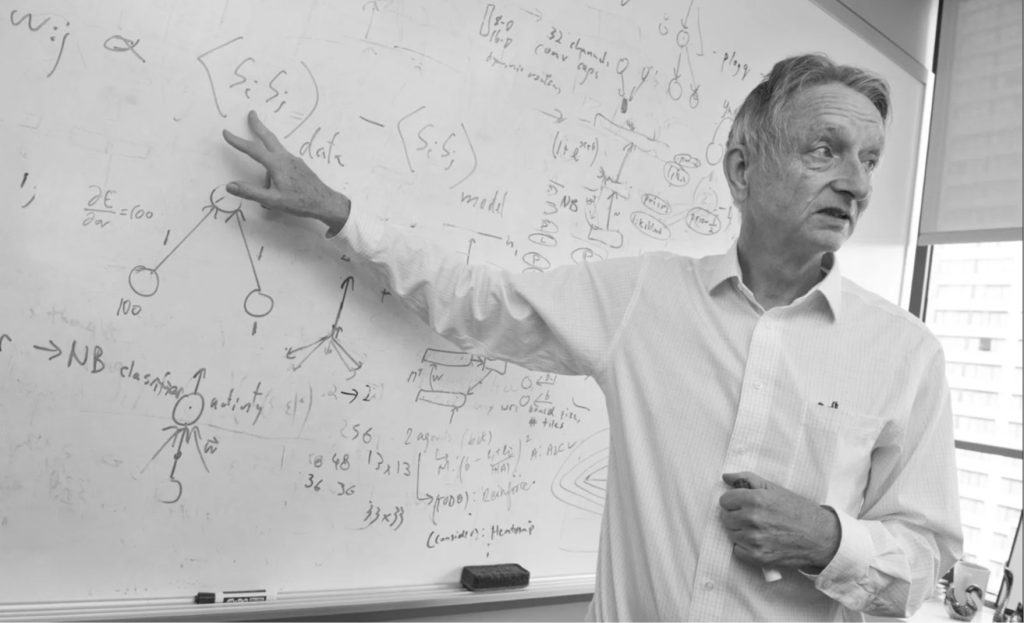

Geoffrey Hinton, “Godfather of Artificial Intelligence”, Nobel Prize in Physics 2024: Humans will be the second most intelligent beings on the planet.

In an interview on CBS, Geoffrey Hinton, “Godfather of Artificial Intelligence” and winner of the Nobel Prize in Physics 2024, said that human beings will be the second most intelligent beings on the planet.

CBS: So, human beings will be the second most intelligent beings on the planet?

Geoffrey Hinton: Yes!

CBS: Do you believe that Artificial Intelligence systems can understand?

Geoffrey Hinton: Yes!

CBS: Do you believe that Artificial Intelligence systems are intelligent?

Geoffrey Hinton: Yes!

CBS: Do you believe that these Artificial Intelligence systems have their own experiences and can make decisions based on those experiences?

Geoffrey Hinton: Yes! In the same way that humans do, yes!

CBS: Do they have consciousness?

Geoffrey Hinton: I think they probably don’t have much self-awareness at the moment, so in that sense, I don’t think they have consciousness.

CBS: Will they develop self-awareness, consciousness?

Geoffrey Hinton: Oh yes, I think they will achieve that over time!

The so-called “Godfather of Artificial Intelligence”, British professor Geoffrey Hinton, was awarded the 2024 Nobel Prize in Physics for his immense contribution to the development of Artificial Intelligence. He was also awarded the Turing Award (also known as the “Nobel Prize of Computing”) in 2018.

Watch the full interview with Professor Geoffrey Hinton on the show “60 Minutes” by the American TV network CBS: “Godfather of AI” Geoffrey Hinton: The 60 Minutes Interview

ChatGPT Is Not “True AI.” Michael Wooldridge, Professor of Computer Science at The University of Oxford Explains Why.

Large language models are an impressive advance in AI, but we are far away from achieving human-level capabilities.

Artificial intelligence has been a dream for centuries, but it only recently went “viral” because of enormous progress in computing power and data analysis. Large language models (LLMs) like ChatGPT are essentially a very sophisticated form of auto-complete. They are so impressive because the training data consists of the entire internet. LLMs might be one ingredient in the recipe for true artificial general intelligence, but they are surely not the whole recipe, and it is likely that we don’t yet know what some of the other ingredients are.

Thanks to ChatGPT we can all, finally, experience artificial intelligence. All you need is a web browser, and you can talk directly to the most sophisticated AI system on the planet: the crowning achievement of 70 years of effort. And it seems like real AI, the AI we have all seen in the movies. So, does this mean we have finally found the recipe for true AI? Is the end of the road for AI now in sight?

AI is one of humanity’s oldest dreams. It goes back at least to classical Greece and the myth of Hephaestus, blacksmith to the gods, who had the power to bring metal creatures to life. Variations on the theme have appeared in myth and fiction ever since then. But it was only with the invention of the computer in the late 1940s that AI began to seem plausible.

A recipe for symbolic AI

Computers are machines that follow instructions. The programs that we give them are nothing more than finely detailed instructions: recipes that the computer dutifully follows. Your web browser, your email client, and your word processor all boil down to these incredibly detailed lists of instructions.
So, if “true AI” is possible (the dream of having computers that are as capable as humans), then it too will amount to such a recipe. All we must do to make AI a reality is find the right recipe. But what might such a recipe look like? And given recent excitement about ChatGPT, GPT-4, and Bard (large language models, or LLMs, to give them their proper name), have we now finally found the recipe for true AI?

For about 40 years, the main idea that drove attempts to build AI was that its recipe would involve modeling the conscious mind: the thoughts and reasoning processes that constitute our conscious existence. This approach was called symbolic AI, because our thoughts and reasoning seem to involve languages composed of symbols (letters, words, and punctuation). Symbolic AI involved trying to find recipes that captured these symbolic expressions, as well as recipes to manipulate these symbols to reproduce reasoning and decision making.

Symbolic AI had some successes, but failed spectacularly on a huge range of tasks that seem trivial for humans. Even a task like recognizing a human face was beyond symbolic AI. The reason for this is that recognizing faces is a task that involves perception. Perception is the problem of understanding what we are seeing, hearing, and sensing. Those of us fortunate enough to have no sensory impairments largely take perception for granted: we don’t really think about it, and we certainly don’t associate it with intelligence. But symbolic AI was just the wrong way of trying to solve problems that require perception.

Neural networks arrive

Instead of modeling the mind, an alternative recipe for AI involves modeling structures we see in the brain. After all, human brains are the only entities that we know of at present that can create human intelligence. If you look at a brain under a microscope, you’ll see enormous numbers of nerve cells called neurons, connected to one another in vast networks.
Each neuron is simply looking for patterns in its network connections. When it recognizes a pattern, it sends signals to its neighbors. Those neighbors in turn are looking for patterns, and when they see one, they communicate with their peers, and so on. Somehow, in ways that we cannot quite explain in any meaningful sense, these enormous networks of neurons can learn, and they ultimately produce intelligent behavior.

The field of neural networks (“neural nets”) originally arose in the 1940s, inspired by the idea that these networks of neurons might be simulated by electrical circuits. Neural networks today are realized in software, rather than in electrical circuits, and to be clear, neural net researchers don’t try to actually model the brain. But the software structures they use (very large networks of very simple computational devices) were inspired by the neural structures we see in brains and nervous systems.

Neural networks have been studied continuously since the 1940s, coming in and out of fashion at various times (notably in the late 1960s and mid 1980s), and often being seen as in competition with symbolic AI. But it is over the past decade that neural networks have decisively started to work. All the hype about AI that we have seen in the past decade is essentially because neural networks started to show rapid progress on a range of AI problems.

I’m afraid the reasons why neural nets took off this century are disappointingly mundane. For sure there were scientific advances, like new neural network structures and algorithms for configuring them. But in truth, most of the main ideas behind today’s neural networks were known as far back as the 1980s. What this century delivered was lots of data and lots of computing power. Training a neural network requires both, and both became available in abundance this century. All the headline AI systems we have heard about recently use neural networks.
For example, AlphaGo, the famous Go playing program developed by London-based AI company DeepMind, which in March 2016 became the first Go program to beat a world champion player, uses two neural networks,